My Journey Of Building Graphics Rendering Engine Using Vulkan SDK | Week 7

We are barely 3 weeks away from the final presentation. The goals for this week were to complete the Dear ImGui integration and implement projective texture mapping.

Howdy everyone! If you’re a regular visitor and here to see my progress on this project, I am glad to see you here. If you’re a new visitor, you might like to start from my first blog post, from when I started working on this project from scratch. Thanks for visiting.

Alright, so in the last 1:1, we observed that I’d implemented a point light but the specular highlights weren’t up to the mark. The reason was that I was using a texture with no alpha values. One of the nice things about the 4th color component is that you can use it to store a specular map for easier access. In the shader, I was trying to use the 4th component (the w component), whereas the texture didn’t have a 4th color component. So I imported the texture into Photoshop and added an alpha channel, which resolved the point light rendering issue. Here are the tasks which I am going to discuss in more detail.

- Complete Dear ImGui Integration in our engine.

- Implement Projective texture mapping

As I described in last week’s blog post, we had imported 4 ImGui files from the examples into our engine.

- imgui_impl_vulkan.h

- imgui_impl_vulkan.cpp

- imgui_impl_glfw.h

- imgui_impl_glfw.cpp

These 4 files take care of setting up the parallel Vulkan pipeline required for rendering the ImGui UI. The way our example used to work is, we set up our render pass while setting up the pipeline, and then we just called the updateUniformBuffers() method per frame to pass transform & color data to the shaders using uniform buffers. So we weren’t recording vkCmdDrawIndexed() every frame; rather, we were only updating the uniform buffers that the shaders read.

If you’ve looked into the ImGui integration example, it starts a new ImGui frame for the Vulkan and GLFW backends every frame, and it also records and submits a command buffer every frame. This approach is different from our current approach.

So, to solve the rendering issues, I visited multiple Vulkan-related forums, where I found a comment by Sascha Willems (who has contributed a lot to the Vulkan community) saying that you don’t need 2 render passes. You can have multiple pipelines but a single render pass. (You can always have multiple subpasses for doing any post-processing work.) So, I decided to move my command buffer recording from the createCommandBuffers() method to the per-frame draw call. Previously I was beginning the render pass 2 times, which was the problem behind my implementation.

Link to This Project’s GitHub Repository

By the way, feel free to visit my GitHub repository to check out my project and download the code files.

You can go to the “releases” section on my GitHub repo and download the zip file under the tag “Week_VII_Progress”, so you can look into the code which I’m referencing here.

Okay. Coming back to the ImGui setup, we need to call the ImGui_ImplVulkan_Init() method, which requires a custom struct composed of our host rendering pipeline objects as an input. One important thing to note is the descriptor pool. We have to add a new combined image sampler to our descriptor pool in order to accommodate the ImGui UI. Make sure you’ve increased the count of “maxSets” by 1 as well.

Then, while we are recording command buffers, between beginning and ending the render pass, we call our model’s vkCmdDrawIndexed(params) and then we call ImGui_ImplVulkan_RenderDrawData(params), which internally issues ImGui’s own vkCmdDrawIndexed(params) calls. This is all you need to integrate ImGui in Vulkan. If everything else is set up correctly, you should be able to see the ImGui window as an overlay on top of your screen.

Now, let’s move to projective texture mapping. So what is projective texture mapping? It is a technique for projecting a 2D texture onto arbitrary geometry. First we need to add a new class called Projector, which is very similar to the Camera class. A projector is defined by a frustum and is used to generate texture coordinates to sample the projected image.

To implement projective texture mapping, we need to add another combined image sampler to our descriptor pool, create a new image and its memory, create descriptor sets, and add and configure descriptors. This update workflow is the same whether you want to add a new uniform buffer or a combined image sampler. We load the texture which we want to project into the new image using the stb_image library. This is exactly the same as what we did before to load the texture for our model.

Now that we have updated the pipeline, we need to update our UniformBufferObject to accommodate projectiveTextureMatrix, which is calculated by combining the model’s World matrix, the Projector’s ViewProjection matrix and the ProjectedTextureScalingMatrix, so that a position is transformed by World first, then by ViewProjection, then by the scaling matrix. Here is one new thing you might be wondering about: what is this ProjectedTextureScalingMatrix? We know that the texture UV range is [0, 1], whereas the x and y coordinates we get from the ViewProjection matrix (once divided by w) are in normalized device coordinate (NDC) space, whose range is [-1, +1]. So we need a scaling matrix which remaps those coordinates from NDC into projective texture space using the following equations.

u = 0.5x + 0.5 and v = -0.5y + 0.5

So now let’s look at what’s changed in the vertex and fragment shaders.

For the vertex shader, we are passing projectiveTextureMatrix as a part of the uniform buffer. The shader multiplies it with ObjectPosition to get the projected texture coordinate and passes it to the fragment shader. That’s the only change in the vertex shader.

For the fragment shader, we divide the x & y components of the projected texture coordinate by its w component to get the final texture coordinates. This is called the homogeneous divide. Then we use the new combined image sampler, which we have bound to the shader, to sample the texture for projective mapping. We multiply the sampled color with the existing color output to get the final color output.

A couple of important things to note here: for our new sampler’s address modes, we are using “VK_SAMPLER_ADDRESS_MODE_CLAMP_TO_BORDER” with the border color “VK_BORDER_COLOR_INT_OPAQUE_WHITE”, and the filter is set to linear. (The INT border colors are meant for integer image formats, so VK_BORDER_COLOR_FLOAT_OPAQUE_WHITE is probably the better match for a normal UNORM texture.) This is important because the projected texture coordinates can extend beyond the range [0, 1], and a white border provides the multiplicative identity for any such coordinates.

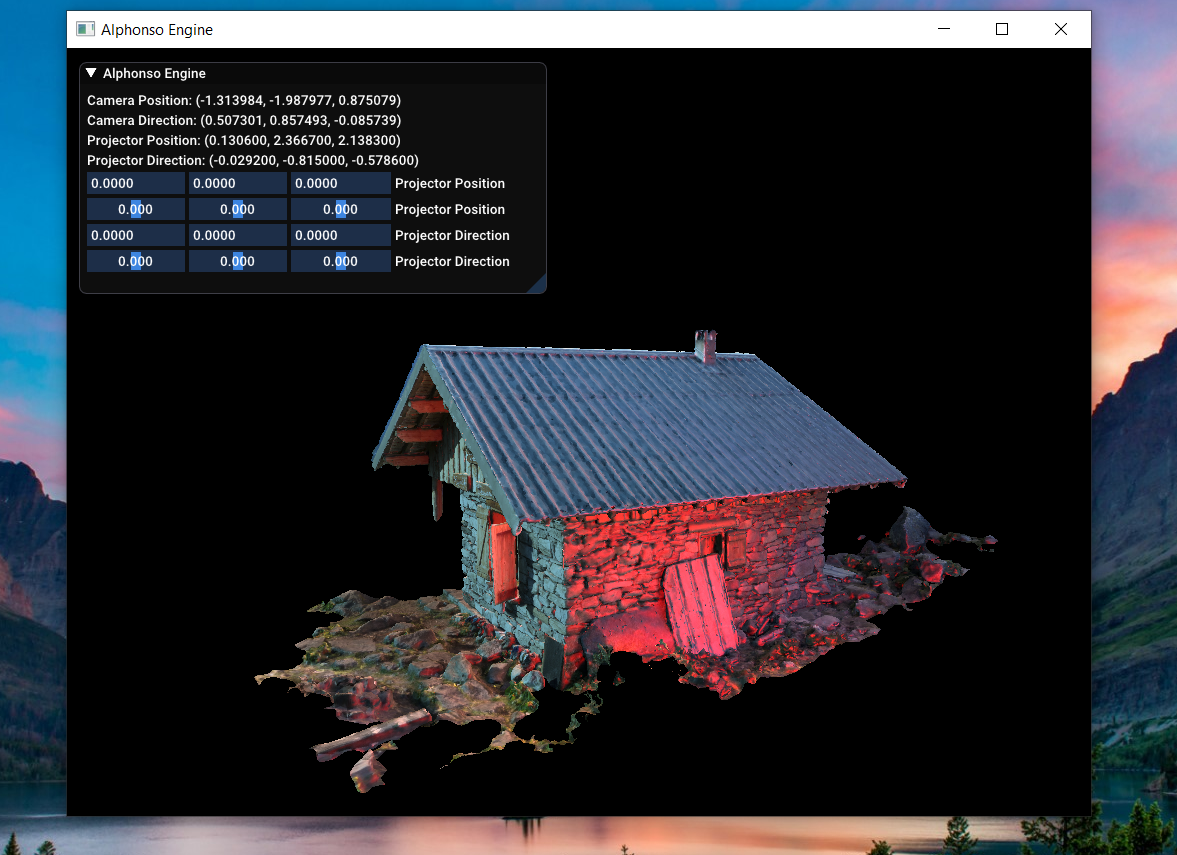

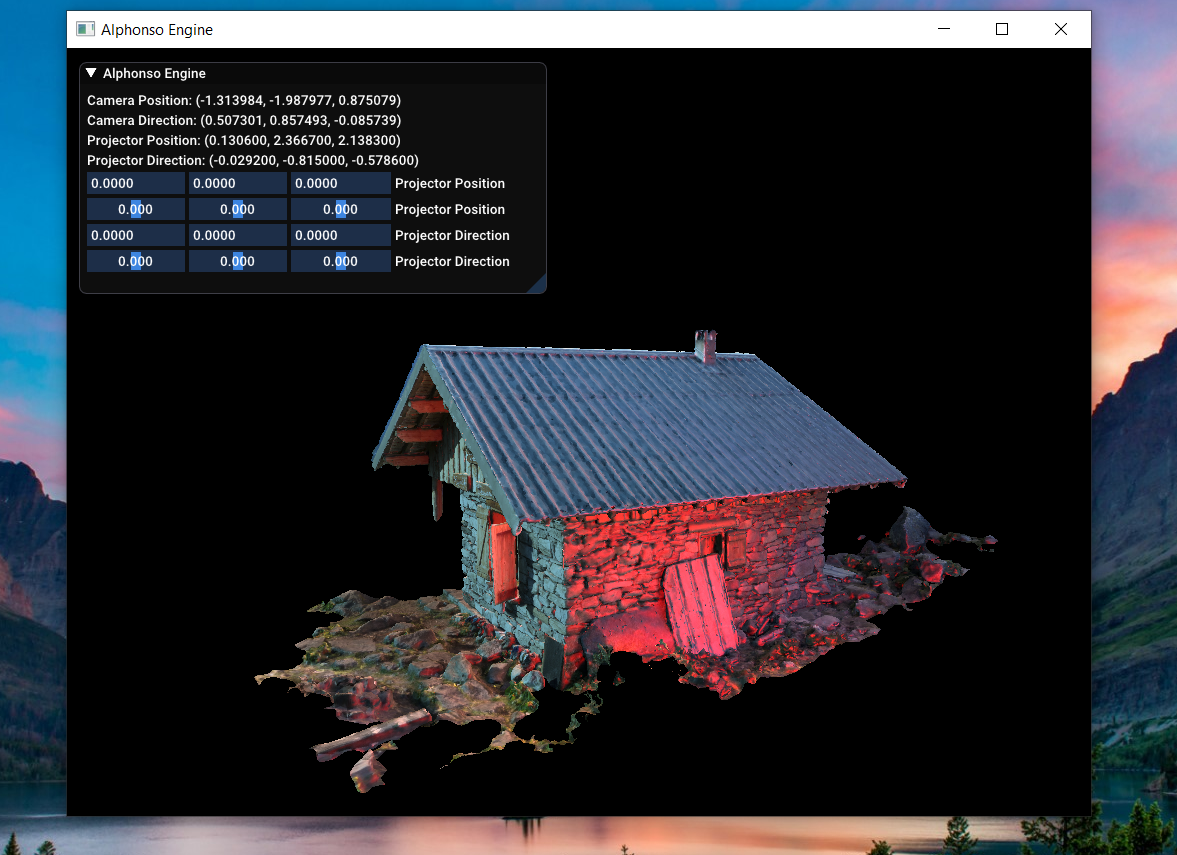

This is how our model looks with the point light (after adding the specular map through the alpha channel of the texture).

Next Week’s Tasks:

For next week, I am going to resolve the projective texture mapping errors and try to add proxy objects for easier debugging, before moving on to implementing the shadow mapping technique.

I hope that you’ve found this dev diary informative and useful. Feel free to comment down below on what you think, and if you have any questions or requests, you’re most welcome! Stay tuned!

Thanks for visiting. Have a great day ahead. 🙂